Shaping the maneuver analysis experience for the U.S. Department of Defense. Reimagining how pilots review flights with AI, giving instructors real-time insight into mission readiness.

Augmenting flight debriefs

This project supported the U.S. Air Force, which operates under the Department of Defense and has long evaluated pilot performance and mission readiness through instructor-led post-flight reviews.

The Air Force has a proud multigenerational tradition of aviation excellence, yet many of its training systems were built for a previous era. They rely on traditional debrief methods, aging hardware, and legacy software that was not designed for artificial intelligence or long-term performance tracking.

So despite the U.S. Air Force’s ability to project strength, the methods used to assess pilot performance weren't keeping pace with what modern technology could provide.

Every flight generates millions of data points capturing maneuvers, reactions, and situational decisions in real time. However, this data was erased at the end of the debrief, leaving any remaining insights scattered across personal notes. This made it difficult for instructors and pilots to understand performance, track progress over time, or use the insights to improve safety and skills.

With pilots balancing intense schedules and high standards, squadrons needed a way to turn complex data into an enhanced learning experience that supports teamwork, fits into their current workflow, and helps pilots continuously improve their performance.

"While we spend hours analyzing and debriefing each training mission to maximize learning, our instructors are very time-limited and can only record some of the subjective data to summarize a student's performance."

Bridging the gap between people and technology

While Data Driven Readiness (DDR) demonstrated potential for insights into flight data, the platform was hard to use and its assessments lacked transparency, which made them untrustworthy to pilots and instructors. Pilots struggled to understand their performance, and instructors had no way to provide feedback, which limited collaboration.

Pilots couldn’t see when or where AI was being used, leaving them uncertain about the validity of the insights it generated and questioning how much they could trust AI-generated feedback.

This lack of transparency limited product adoption and undermined confidence in the platform’s recommendations. Pilots still saw the potential of AI to highlight opportunities for improvement, but they couldn’t trust the system.

Building trust with AI

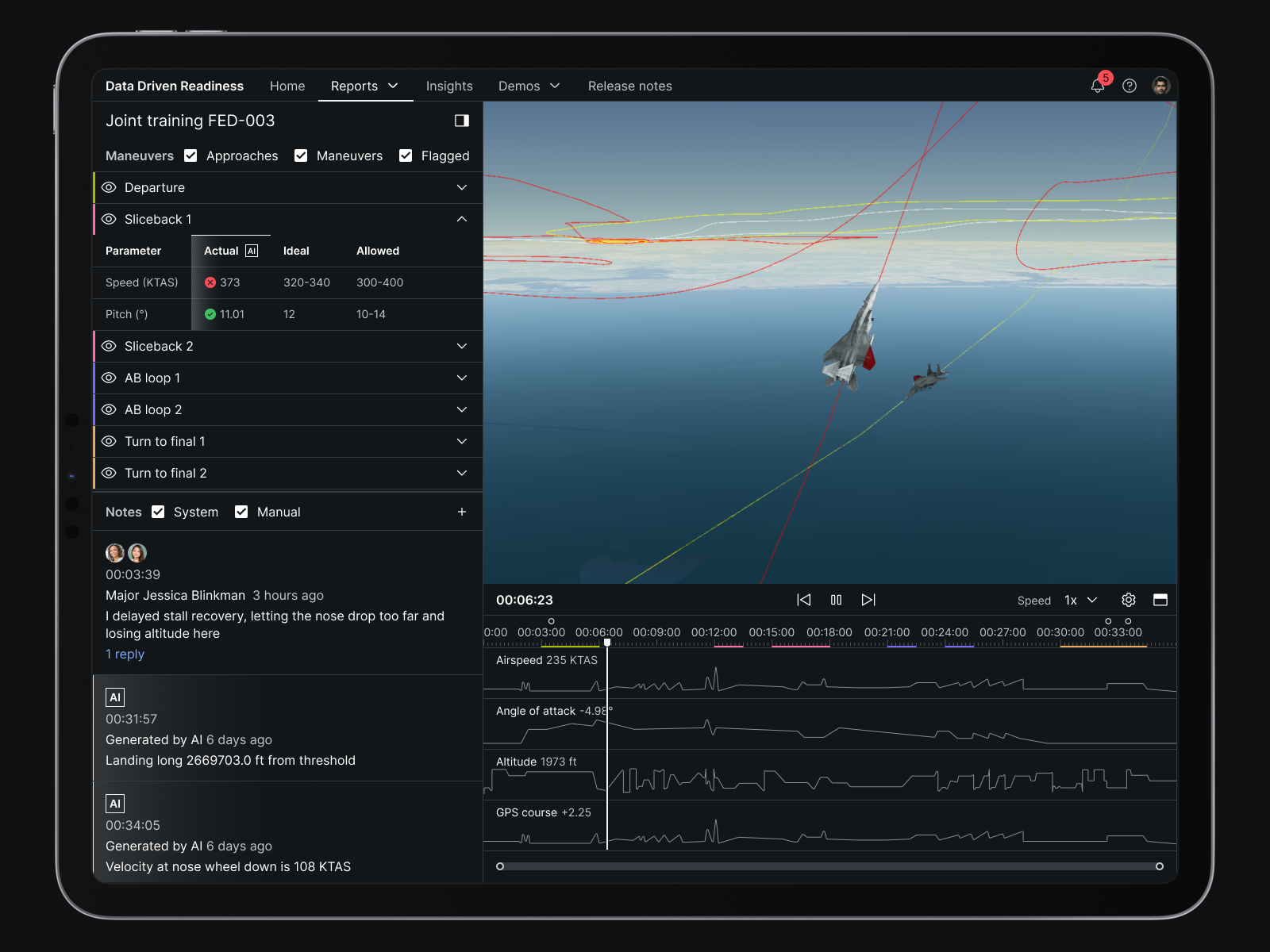

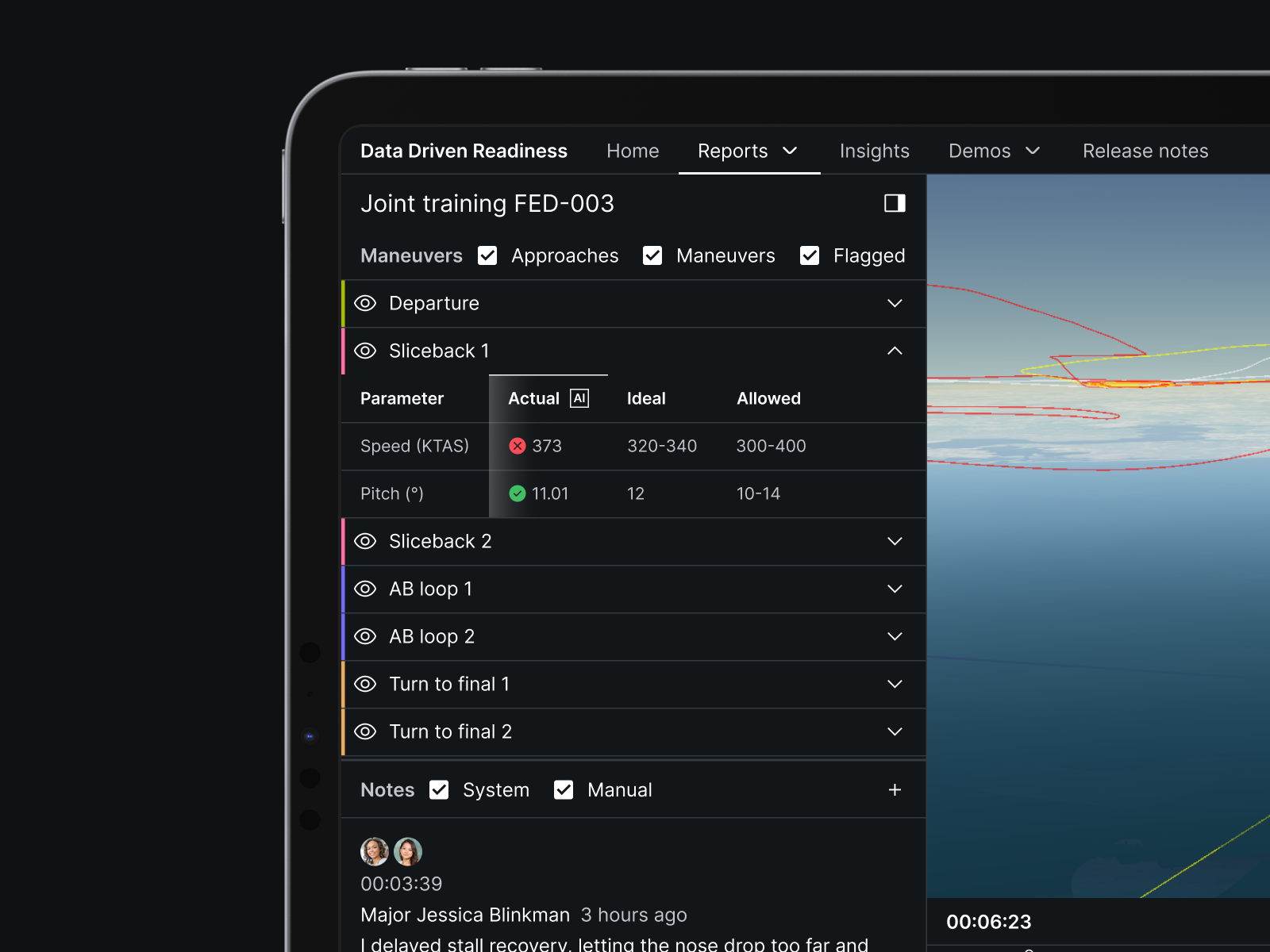

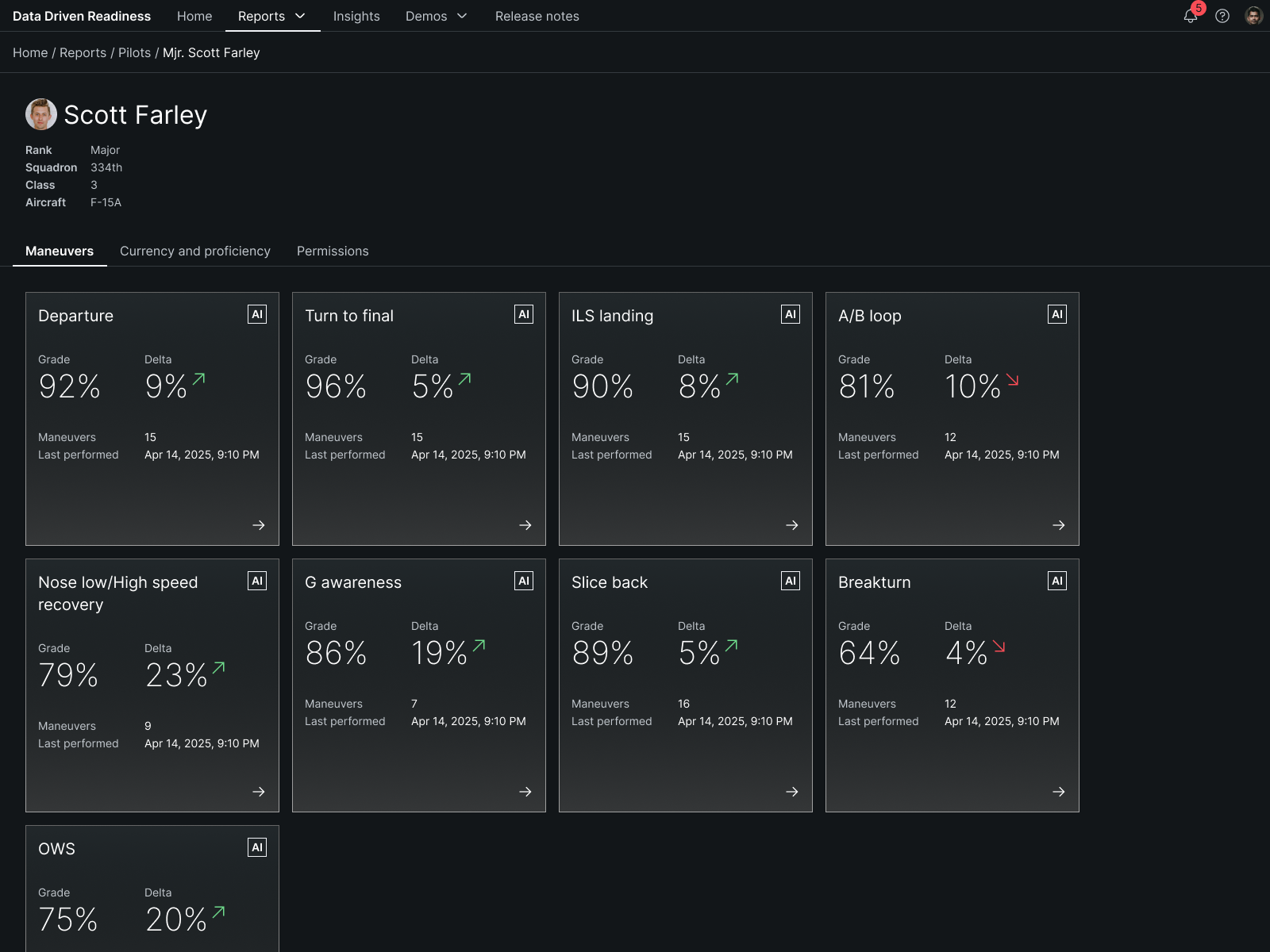

From this point, we reoriented the product priorities around trust and usability. A critical part of the work was making AI visible within the user interface to build trust and confidence. We redesigned the experience so that moments affected by AI carry a clear indicator with an explanation, making it easier for pilots to cross-check the output against their own judgment.

The result was an AI-powered user experience that pilots can trust:

- Indicators for AI-generated content: Transparency about AI is key, so the AI label is an accessible, interactive element that's present at every interface level.

- A consistent visual reference: Users need a universal and easily identifiable visual reference when they want to understand more about how AI is being used.

- Guiding users with explainability: Users need a way to access details about how an AI was built. By providing a path to explainability through the AI label, we give users a recognizable location to learn more.

"One thing that AI is really good at is taking a repetitive process and performing it at fractions of the time that it would take a human."

Supercharging how pilots learn

I led short, focused research sessions with pilots and instructors to understand how they review flights, what slows down learning, and where confidence breaks.

Two distinct user types emerged: instructors who think in patterns and coaching, and trainees who want a direct, unfiltered understanding of their own performance. Aligning these two perspectives became central to the direction of the redesign.

We approached this redesign with one goal: make pilot performance insights feel clear, fair, and easy to act on without adding more work for people already operating at full speed.

We reduced performance scores to a pass-or-fail format that removed the need for interpretation. Flight data that was once just numbers is now visualized directly on the timeline, letting pilots evaluate the underlying data points themselves.

Empowering instructors in the classroom

Instead of leaving feedback scattered across personal notebooks, we created a shared space for notes where pilots and instructors could tag teammates, add context, and continue discussions beyond the debrief, turning every flight into a team learning experience rather than only an exercise in self-reflection.

Making an impact

We transformed DDR from a difficult-to-use platform into a collaborative tool that pilots trust to evaluate and improve their performance, while still fitting into their demanding workflows. The improvements that made the biggest impact let pilots:

- Quickly identify performance gaps through transparent grading

- Navigate flight simulations more efficiently with intuitive controls

- Collaborate with instructors through interactive notes

- Cross-evaluate with human analysis using the enhanced visualizations

"Hiring Chris brought us instant relaxation. Before working with Chris I didn’t realize how much it would take to do the research sitting down with pilots to better solve their problems."

Final thoughts

This project was as much about adapting design methods to constraints as it was about building a better tool.

- Adapt to user constraints: Pilots' limited availability required leaner, more flexible research methods

- Embrace the learning curve: The aviation industry is highly technical. Building domain knowledge gave me confidence to drive design decisions

- Organize research better: A dedicated repository would have made user research insights easier to share with stakeholders

- Bridge design and development: By learning GitHub workflows and contributing to CSS tokens, I accelerated implementation and improved team collaboration